L4S impact on Quality of Application Outcome

January 5, 2023

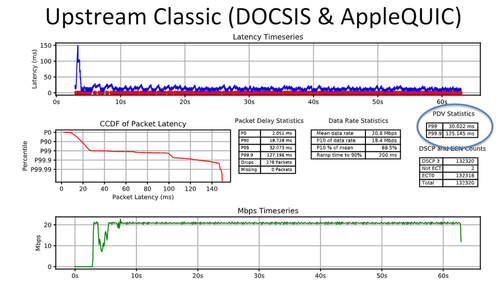

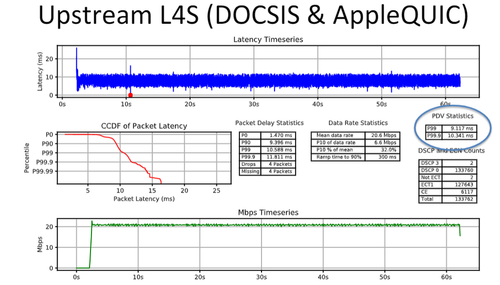

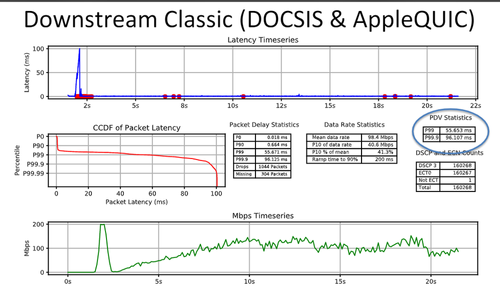

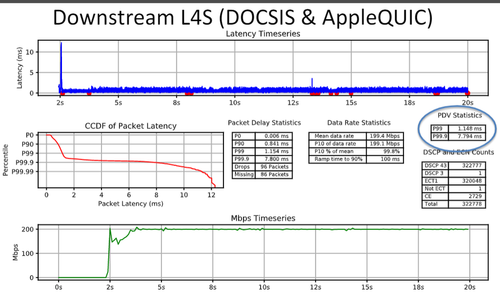

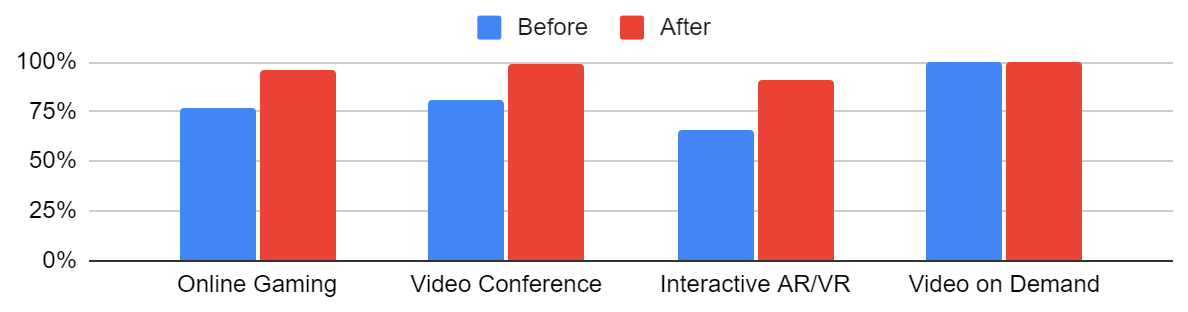

Have you heard about L4S (Low Latency, Low Loss, Scalable Throughput)? This is a new IETF standard that promises to drastically improve latency. The first interop testing was done at the IETF-114 hackathon in July 2022, and you can see the results below.

Before: After:

Awesome! Right?

Or are these results a bit hard to read and understand? Is there a more understandable way to present them?

What does reducing the access network's downstream 99.9th percentile working latency from 96.107 ms to 7.794 ms mean for you and the applications you are using?

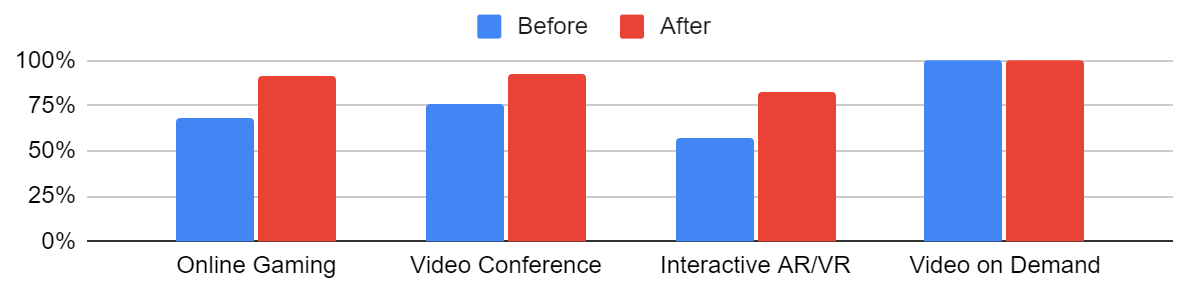

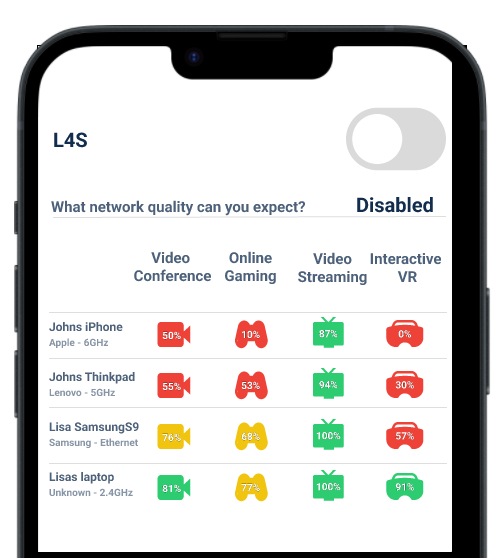

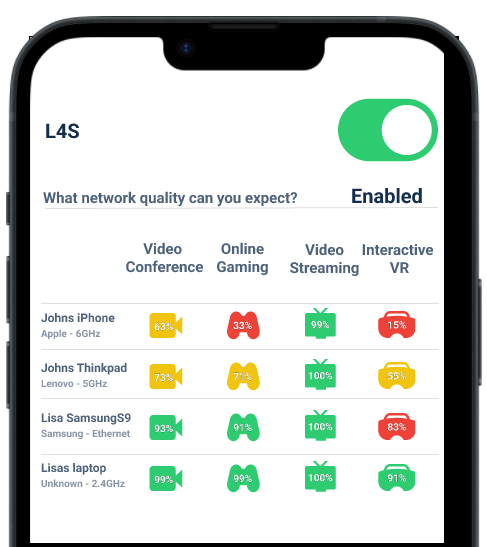

This blog post presents the results from the L4S plugfest in the Quality of Outcome (QoO) framework with network requirements from common application classes (Video Conferencing, Online Gaming, Video on Demand, and interactive VR). The results show that enabling L4S will improve network quality and have a significant impact on the outcome of applications (smoother video conferences, more tactile gaming, etc.) for many end-users.

See what L4S can do for you in this GIF

First a bit about the QoO Framework

The Quality of Outcome framework (working document) is a new proposed standard that:

1. Defines a way of measuring network quality that includes all aspects of latency

2. Defines a way of setting sophisticated network requirements that include all aspects of latency

3. Defines a formula for comparing the measured network quality (1) with the network requirements (2) and calculating the probability that the network requirements were met.

Sounds complicated? What the framework gives you is the possibility of going from highly complex measurements and statistics to statements like:

John’s Laptop has a 99.9% likelihood of perfect video conferencing quality on this network
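To make the idea of "probability that the requirements were met" a bit more concrete, here is a minimal sketch in Python. It is not the formula from the QoO working document. It is a simplified stand-in that assumes a requirement is a set of latency percentile limits, and that the likelihood is the share of measurement windows in which every limit was met. The function names, the requirement, and the sample values are all made up for illustration.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile (p in 0..100) of a list of latency samples in ms."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


def meets_requirement(samples, requirement):
    """True if every required percentile is at or below its latency limit (ms)."""
    return all(percentile(samples, p) <= limit for p, limit in requirement.items())


def likelihood_of_meeting(windows, requirement):
    """Fraction of measurement windows in which the requirement was met."""
    met = sum(1 for window in windows if meets_requirement(window, requirement))
    return met / len(windows)


# Hypothetical requirement: median <= 20 ms and 99th percentile <= 50 ms.
requirement = {50: 20.0, 99: 50.0}

# Hypothetical latency samples (ms), one list per measurement window.
windows = [
    [10, 12, 11, 15, 30],  # met
    [10, 12, 11, 15, 90],  # 99th percentile too high
    [25, 26, 27, 28, 29],  # median too high
    [5, 6, 7, 8, 9],       # met
]

print(likelihood_of_meeting(windows, requirement))  # 0.5
```

With these made-up numbers, the requirement is met in two of the four windows, which is where a statement like "50% likelihood" would come from.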

A very useful feature of the QoO framework in this context is that the measurements are (de)composable. That means that there is a statistically meaningful way to add and subtract segments of the network to calculate the end-to-end quality.

The composability means we can take the results from the L4S interop meeting, which looked at one part of the overall network, and add them to real data to calculate the impact on the outcome of applications. I.e., we can look at what the impact of adding L4S would be like for real devices and applications.

The L4S results

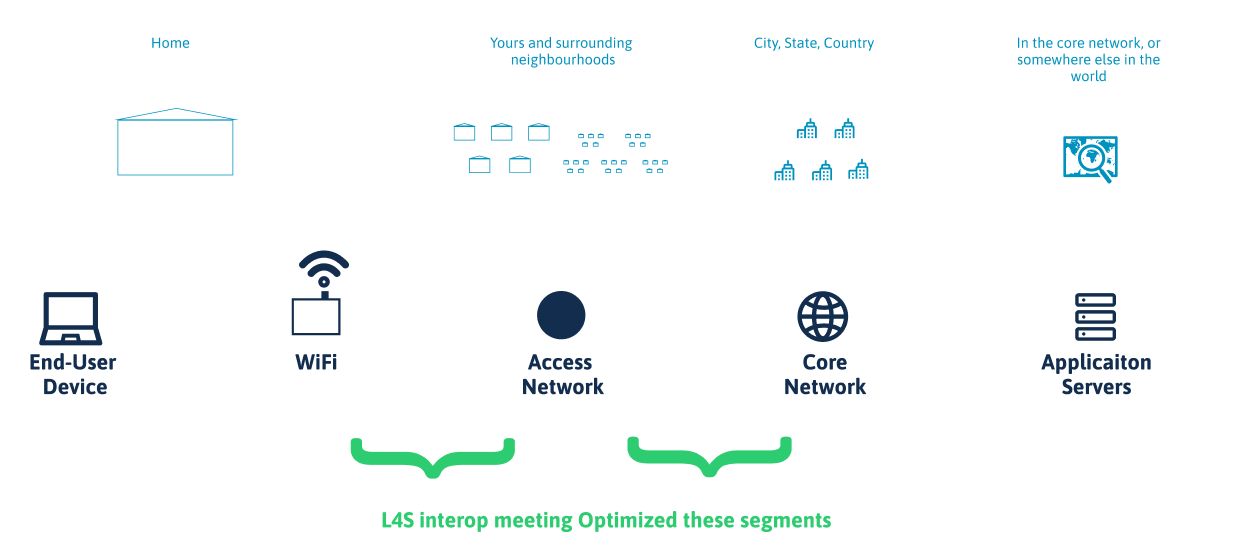

At the plugfest, the participants tested how L4S will reduce the latency at load for a DOCSIS (hybrid-fiber coaxial cable) access and core network.

To illustrate where the optimization is in the grand scheme of a packet traveling from your computer to a server, we can say that they optimized the access and core network. A common (not perfect) way of splitting a residential broadband network is by separating it into:

The home

The access network

The ISP’s core network

From the core to the application servers (which may be in the core network, or somewhere else)*

Calculating the probability of outcomes

The network requirements are made by Domos to represent typical performance for Video Conferencing, Online Gaming, Video Streaming, and interactive VR. Sufficient bandwidth is necessary, but not sufficient, for a good application outcome. Some applications are sensitive to high peaks of latency, others to high average latency, and others to highly variable latency. All of this is taken into account in the QoO framework.

Domos Latency Management monitors all aspects of latency from Home WiFi Routers. This includes monitoring latency separately within the home WiFi/Ethernet, over any access network (fiber/cable/5G/xDSL), within the core of the ISP, and for round trip times to the servers accessed by the end-user.

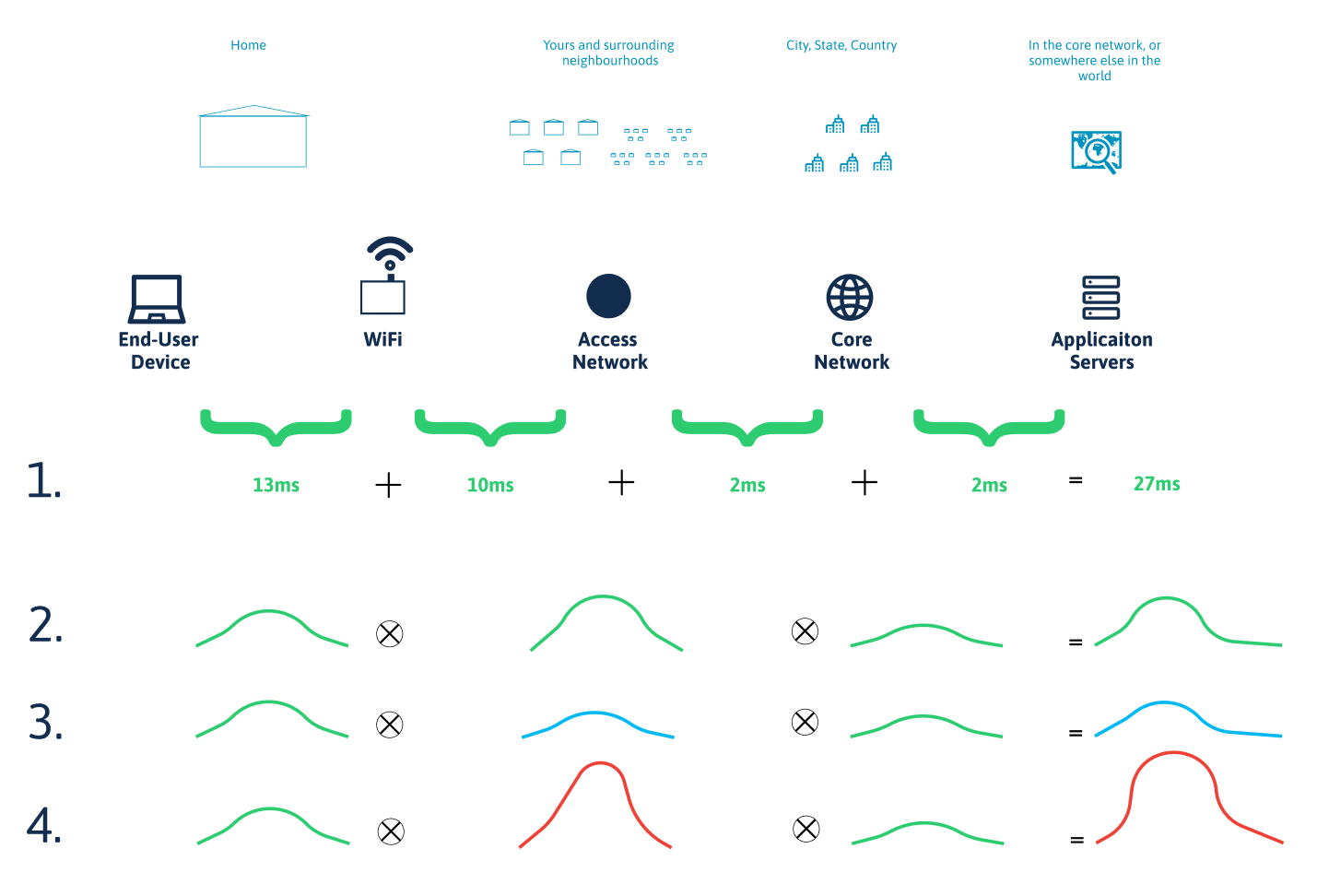

So, by substituting the data from the access and core network with the before and after calculations, we can understand the potential benefits of adding L4S to the access network. (Note: L4S addresses latency end-to-end, but this blog post looks at the incremental benefit of adding it to the access and core network).

The next figure shows how we took real-life measurements and replaced the corresponding segments with the before and after results from the L4S interop meeting.

For single packets, we can add the latency of each segment

Looking at network traffic over time, or using sampling to avoid overhead, we can use convolution of distributions.

For the L4S results, we can switch the latency distribution for the access network with the before and after results.
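As a rough illustration of the convolution step, here is a small Python sketch. It assumes the latencies of the segments are independent of each other, which is what makes the distribution of the sum equal to the convolution of the per-segment distributions. The distributions below are hypothetical numbers chosen to keep the arithmetic easy to follow. They are not the plugfest results or Domos measurements.

```python
def convolve(pmf_a, pmf_b):
    """Distribution of the sum of two independent latencies.

    Each pmf maps a latency in whole milliseconds to its probability.
    """
    out = {}
    for lat_a, p_a in pmf_a.items():
        for lat_b, p_b in pmf_b.items():
            total = lat_a + lat_b
            out[total] = out.get(total, 0.0) + p_a * p_b
    return out


# Hypothetical per-segment distributions (latency in ms -> probability).
home = {2: 0.5, 10: 0.5}
access_before = {5: 0.75, 40: 0.25}
access_after = {5: 0.75, 8: 0.25}

# Swap only the access segment and keep the home segment unchanged.
end_to_end_before = convolve(home, access_before)
end_to_end_after = convolve(home, access_after)

for label, pmf in (("before", end_to_end_before), ("after", end_to_end_after)):
    print(label, sorted(pmf.items()))
```

More segments (core, core-to-server) can be added by calling `convolve` again on the result.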

Some technicalities (for the interested, feel free to go to the next section)

To make the calculations, I took the key percentiles from the L4S interop results and used a method called T-Digest to estimate the distribution. Then I convolved that distribution with the WiFi latency distributions of 4 devices (from the homes of 3 Domos employees) and with the core-to-server latency distributions, both taken from each home's peak hour (with regard to usage) in the last week. Finally, I ran the end-to-end before and after results through the QoO framework with Domos Network Requirements for Video Conferencing, Online Gaming, Video Streaming, and Interactive VR.

The result is a before-and-after comparison of L4S optimization on the access network for 4 devices in a peak hour.
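For readers who want a feel for how a handful of percentiles can be turned into a full distribution, here is a minimal Python sketch. It does not use T-Digest. It swaps in a much simpler technique: linear interpolation between the known percentiles, followed by evenly spaced sampling of the resulting quantile function. The percentile values are hypothetical and are not the published interop numbers.

```python
def quantile_function(points):
    """Build q(u), 0 <= u <= 1, by linear interpolation between known percentiles.

    `points` is a list of (percentile in 0..100, latency in ms), sorted by percentile.
    """
    def q(u):
        p = u * 100
        if p <= points[0][0]:
            return points[0][1]
        for (p0, v0), (p1, v1) in zip(points, points[1:]):
            if p <= p1:
                return v0 + (v1 - v0) * (p - p0) / (p1 - p0)
        return points[-1][1]
    return q


def to_samples(points, n=1000):
    """Approximate the distribution with n evenly spaced quantile samples."""
    q = quantile_function(points)
    return [q((i + 0.5) / n) for i in range(n)]


# Hypothetical key percentiles: (percentile, latency in ms).
points = [(0, 4.0), (50, 6.0), (99, 20.0), (100, 100.0)]

samples = to_samples(points)
print(len(samples), min(samples), max(samples))
```

The resulting samples can then be binned into a distribution and convolved with the distributions of the other segments.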

Picking devices

While most devices are able to run video conferencing, gaming, and video streaming smoothly, we wanted to investigate the network performance of devices that were experiencing difficulties. We selected devices from three Domos employees' homes in urban areas with low minimum latency to most servers, and varied WiFi conditions, where Domos had inferred poor quality due to network conditions in either a video conference, video streaming, or gaming session in the past week. These results should not be taken as an average of all devices, but as an indication of the improvement that can be gained for devices facing network quality issues.

Results

Impact on the devices

Domos considers an 80% likelihood of a perfect outcome to be acceptable. Less than 60% is considered very bad.

The results presented in bar charts

Device 1:

Device 2:

Device 3:

Device 4:

The results presented in a mobile UI

Summary

L4S is a very promising standard for improving network quality for interactive applications. In many areas, we have reached the bandwidth necessary to run the most common applications. Latency is increasingly the bottleneck, but improving it requires new techniques such as L4S. By applying L4S at the access and core network exclusively, we already see significant improvements for end-user devices. We expect the QoO score to increase further when L4S is applied end-to-end! The QoO framework is a great tool for evaluating all dimensions of network quality holistically and presenting them in an understandable manner.

Appendix

Why the probabilities? Why can’t you just say the network is good or bad?

From a network perspective, which is the most useful perspective when addressing network optimizations, it is impossible to fully understand the impact of the network on end-user applications. Here's why:

You can’t measure the network perfectly. Network protocols are designed in a way that makes it impossible to know exactly how long it takes for packets to travel from one point to another. Even if we could measure networks perfectly, there are other factors to consider.

Applications optimize how they send data (the resolution, frames per second, compression techniques) based on the historical quality of the network. Many of them have their own homemade proprietary techniques which we would have to reverse engineer to know perfectly. Additionally, from the perspective of an application, latency within the device is indistinguishable from network latency, and it is not visible from the network.

There are differences internally in the applications as well. Background noise and how much you move affect how much the video and audio can be compressed. If you have constant background noise, the sound may take many times as much network bandwidth as if it is completely quiet. Hence, unless you know what content is sent (which Domos has no plans of ever knowing), it is impossible to know perfectly. There are also differences between different games, different video conference services, and so on.

We will never know perfectly. However, we can get very good at predicting the likelihood!

* You can of course further separate the network and add the transport network as a separate demarcation point. Or we could have gone into the details of different access technologies and whatnot. But that is beside the point for this blog post.