An introduction to L4S

November 24, 2022

L4S is a new standard for congestion control on the internet championed by Bob Briscoe, Greg White (CableLabs) and Koen De Schepper (Nokia). L4S stands for Low-Latency, Low-Loss, Scalable Throughput. The test results from the recent L4S interop event at IETF 115 in London are very impressive, delivering low latency over WiFi and 5G networks. This new technology is gaining momentum. It’s worth taking a good look at how it works.

Internet traffic consists of packets. Sometimes, parts of the internet are unable to quickly service all the packets that arrive because users send more packets than that part of the network can accommodate. Your home router can be one such bottleneck. When the arrival rate exceeds the service rate, packets form queues that introduce latency. Congestion control algorithms are tasked with figuring out how fast they can send their traffic in order to fully utilize the available capacity. The algorithms must do this in a way that doesn't cause excessive packet loss and is relatively fair to other users sharing the path. Classic TCP does this by cutting its sending rate in half whenever it detects a packet loss.
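To make the queueing argument concrete, here is a minimal sketch in Python. It assumes a single FIFO bottleneck that starts empty and has an unlimited buffer; the function names and the example rates are made up for illustration and do not come from any real stack.

```python
def queue_delay_ms(arrival_mbps, service_mbps, duration_s):
    """Queuing delay (ms) after `duration_s` seconds at a FIFO bottleneck.

    Assumes the queue starts empty and the buffer never overflows.
    """
    # Traffic that arrives faster than it can be served piles up as backlog.
    backlog_mbit = max(0.0, (arrival_mbps - service_mbps) * duration_s)
    # A newly arriving packet waits for the whole backlog to drain.
    return 1000.0 * backlog_mbit / service_mbps


def classic_rate_after_loss(rate_mbps):
    """Classic TCP-style reaction to a loss: halve the sending rate."""
    return rate_mbps / 2.0


if __name__ == "__main__":
    # Sending 110 Mbit/s into a 100 Mbit/s link for one second.
    print(queue_delay_ms(110, 100, 1.0))
    # The classic response to the eventual packet loss.
    print(classic_rate_after_loss(110.0))
```

Even a 10% overload held for one second builds up a tenth of a second of queuing delay in this simplified model, which is why the sender has to back off.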

L4S defines new rules for Internet congestion control (the Prague requirements), inspired by Data Center TCP (DCTCP). Compared to classic TCP, L4S Prague changes both how network bottlenecks signal congestion and how senders react to those signals.

Where classic TCP relies on packet losses to detect congestion, L4S Prague uses an explicit congestion signal carried in the IP header, called Explicit Congestion Notification (ECN). Using ECN makes it possible to signal congestion early and often. A congestion signal can be sent before the queue begins to fill and without paying the costs of dropping packets. This is one of the major strengths of L4S. Early and frequent signals allow the sender to react less dramatically to each congestion signal, so the transmission rate can be fine-tuned more quickly and more accurately. This, in theory (and in testbeds), allows for both high throughput and low latency. As described up to now, L4S Prague resembles DCTCP, but L4S Prague goes beyond DCTCP with extra requirements to make it work on the Internet (pacing, better RTT-independence, smaller bursts, controllable to lower rates, …).

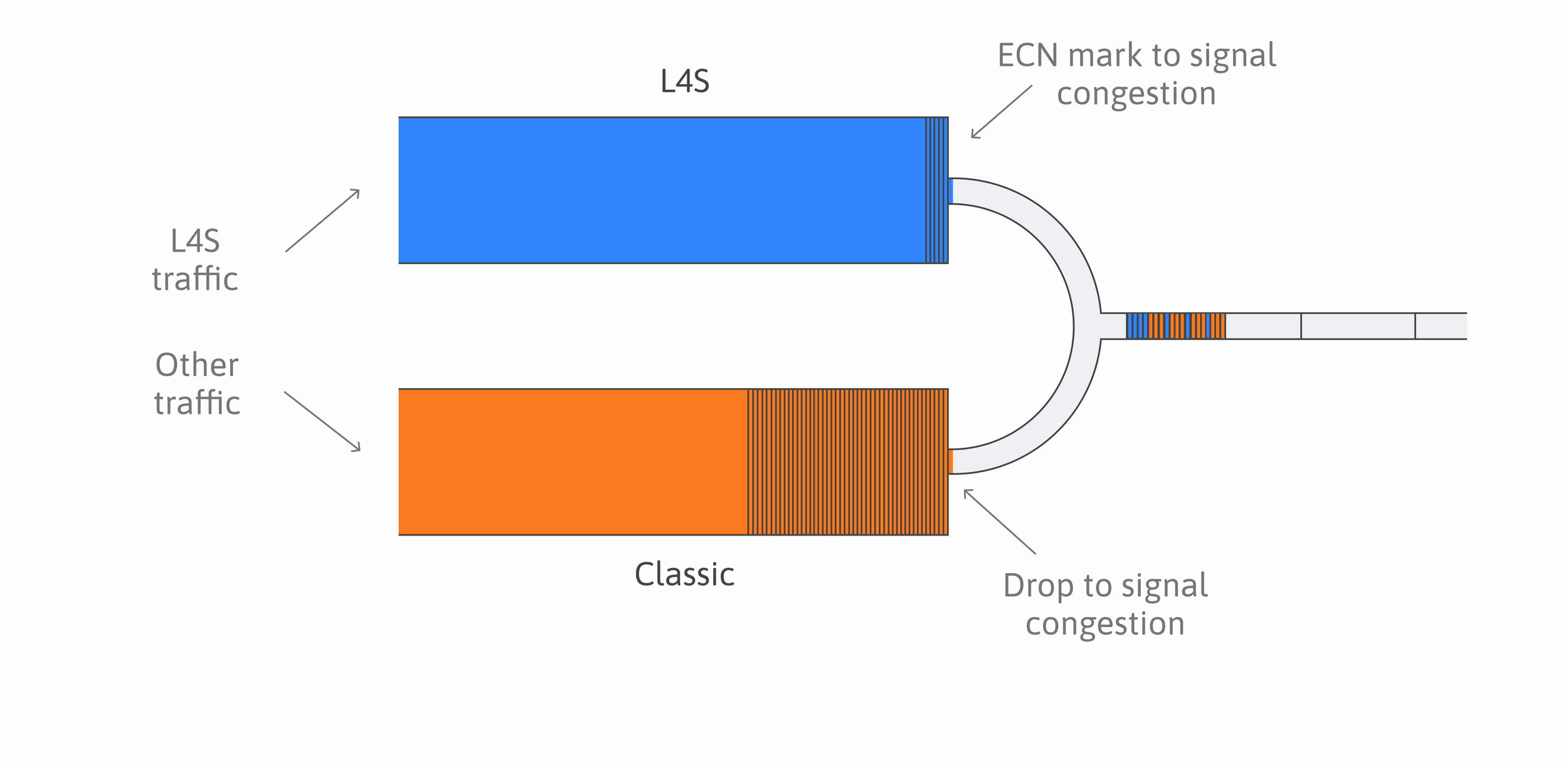
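The difference between the two reactions can be sketched in a few lines of Python. This follows the general shape of the DCTCP response (a moving average of the fraction of marked packets, and a window reduction proportional to it). It is not the Prague implementation; the function names, the gain `g = 1/16` and the two-packet minimum window are assumptions made for this illustration.

```python
def update_alpha(alpha, marked, acked, g=1 / 16):
    """Moving average of the fraction of ECN-marked packets per round trip."""
    fraction = marked / acked if acked else 0.0
    return (1 - g) * alpha + g * fraction


def scalable_cwnd(cwnd, alpha):
    """DCTCP-style response: back off in proportion to the marking level."""
    return max(2.0, cwnd * (1 - alpha / 2))


def classic_cwnd(cwnd):
    """Classic response: halve the window on any congestion signal."""
    return max(2.0, cwnd / 2)


if __name__ == "__main__":
    alpha = update_alpha(0.0, marked=10, acked=100)
    print(alpha)                        # small estimate after one lightly marked round
    print(scalable_cwnd(100.0, alpha))  # gentle reduction
    print(classic_cwnd(100.0))          # halving
```

When every packet is marked for long enough, `alpha` approaches 1 and the scalable response converges to the classic halving. With light marking the reduction is small, which is what allows frequent signals without large swings in rate.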

Simply enabling ECN in the network to support the DCTCP-style ECN response of Prague would wreak havoc on the internet, because the TCP versions already deployed would see a similarly high and frequent rate of ECN marks or packet losses, and would react by reducing their throughput far too aggressively. Classic traffic therefore needs a lower rate of drops or classic ECN marks than L4S Prague traffic. Apart from that, L4S and Classic packets must be separated into different queues to get the benefit of lower latency. When they share the same queue, L4S experiences the same high latencies as classic TCP.
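One way to give classic traffic fewer signals than L4S traffic is to derive both probabilities from a single congestion level. The sketch below uses the squared relationship described in the DualQ Coupled AQM draft [3]; the function name, the coupling factor `k = 2` and the example input are assumptions for illustration, and a real AQM involves considerably more than this.

```python
def coupled_probabilities(p_base, k=2.0):
    """Return (classic drop/mark probability, L4S mark probability).

    `p_base` is an internal congestion level between 0 and 1.
    Classic traffic sees the square of it, L4S sees k times it.
    """
    if not 0.0 <= p_base <= 1.0:
        raise ValueError("p_base must be between 0 and 1")
    p_classic = p_base ** 2
    p_l4s = min(1.0, k * p_base)
    return p_classic, p_l4s


if __name__ == "__main__":
    p_classic, p_l4s = coupled_probabilities(0.1)
    # Classic traffic is signalled far less often than L4S traffic.
    print(p_classic, p_l4s)
```

With a congestion level of 0.1, classic flows see roughly one signal per hundred packets while L4S flows see roughly one per five, which matches the frequent-but-gentle style of signalling described above.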

Nokia came up with a dual-queue setup to solve this problem. The L4S traffic is placed in one queue, and other traffic is placed in the other. That way we can use different rules for marking and dropping in each of the queues, and everyone can be happy. To sort the traffic into each of the two queues correctly, the L4S dual-queue relies on packets being tagged appropriately (setting the L4S-ID, which is the lower ECN bit, by the QUIC/TCP stack or by the applications for proprietary UDP protocols). The solution is patented by Nokia, but Nokia has waived the patent rights for use with the L4S standard.


The L4S dual queue
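The sorting step can be sketched as a check on the lower ECN bit, as described above. The ECN field is the two least significant bits of the IP header's traffic class (formerly ToS) byte. The function name and queue labels below are hypothetical, and real classifiers may apply additional rules.

```python
# ECN codepoints (two least significant bits of the traffic class byte)
NOT_ECT = 0b00  # not ECN-capable
ECT_1 = 0b01    # ECN-capable, lower bit set (the L4S identifier)
ECT_0 = 0b10    # ECN-capable, classic
CE = 0b11       # congestion experienced


def select_queue(traffic_class_byte):
    """Pick a queue by looking only at the lower ECN bit."""
    lower_ecn_bit = traffic_class_byte & 0b01
    return "l4s" if lower_ecn_bit else "classic"


if __name__ == "__main__":
    for name, value in [("Not-ECT", NOT_ECT), ("ECT(1)", ECT_1),
                        ("ECT(0)", ECT_0), ("CE", CE)]:
        print(name, "->", select_queue(value))
```

Note that a check on the lower bit alone also sends packets already marked as congestion experienced (`CE`) to the L4S queue, since that codepoint has both bits set.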

Low Latency DOCSIS (LLD) is the first technology to adopt the L4S dual queue implementation, and LLD products are already available. LLD combines L4S compatibility with methods for faster resource requests to provide a low latency version of the DOCSIS standard. DOCSIS is the dominant standard for delivering internet connectivity over coax cables.

So, L4S gets us low latency and high throughput. Surely those are all good things, right? What made L4S so controversial? Besides some areas where progress can still be made to improve performance, there is one real issue with L4S that needs special attention. The original ECN implementations, following the previous standard, had to mark ECN packets at the same rate as they drop non-ECN packets. If such legacy ECN AQMs are still active on a single queue shared by L4S and Classic traffic, and that queue becomes a bottleneck, L4S will outcompete Classic congestion controls. Luckily, Classic ECN AQMs have not seen much active deployment on the current or past Internet (the gains were not strong enough compared to traffic without ECN), and more and more people in the IETF are considering deprecating the classic ECN approach so that it does not become a problem in the future.
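A rough calculation shows why equal signalling rates are a problem. The sketch below uses common steady-state approximations that are assumptions here, not results from this article: a Reno-like classic flow settles at a window of about 1.22/sqrt(p) packets, while a DCTCP-like scalable flow settles at about 2/p packets, where p is the marking or drop probability.

```python
import math


def classic_window(p):
    """Approximate steady-state window (packets) of a Reno-like flow."""
    return math.sqrt(1.5 / p)  # roughly 1.22 / sqrt(p)


def scalable_window(p):
    """Approximate steady-state window (packets) of a DCTCP-like flow."""
    return 2.0 / p


if __name__ == "__main__":
    p = 0.01  # the same signalling probability applied to both flows
    ratio = scalable_window(p) / classic_window(p)
    print(classic_window(p), scalable_window(p), ratio)
```

Under these approximations and at the same round-trip time, a 1% signalling rate leaves the scalable flow with a window more than ten times larger than the classic flow's. This is the imbalance that a single shared legacy ECN queue would produce.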

In addition to the fairness issue, research has found that L4S cannot achieve both high utilization and low latency on very unstable links. In fact, this is true for all congestion control algorithms and not just L4S. WiFi, 4G and 5G are perhaps the most obvious examples of varying-capacity link technologies. Several studies conclude that latency spikes could be a significant issue on these technologies, even with L4S. The severity of the latency spike problem depends on how often, how rapidly, and how much the link capacity changes. The problem of latency spikes on variable-capacity links can be mitigated with better schedulers and more robust protocols, and by under-utilizing the link. L4S offers an opportunity to choose a low-latency configuration when that is desirable.
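The trade-off can be illustrated with a deliberately simplified model, which is an assumption of this sketch and not the analysis from the referenced studies. The link capacity drops instantly, and the sender keeps its old rate for a fixed reaction time (for example one round trip) before it adapts. All names and numbers are illustrative.

```python
def latency_spike_ms(old_mbps, new_mbps, reaction_ms, utilization=1.0):
    """Peak queuing delay (ms) after a sudden capacity drop.

    The sender transmits at `utilization * old_mbps` and only adapts
    after `reaction_ms`. The queue is assumed to start empty.
    """
    send_mbps = utilization * old_mbps
    if send_mbps <= new_mbps:
        return 0.0  # the link still keeps up, no queue forms
    # Excess traffic sent during the reaction time, drained at the new rate.
    return (send_mbps - new_mbps) * reaction_ms / new_mbps


if __name__ == "__main__":
    print(latency_spike_ms(100, 50, 20))        # capacity halves
    print(latency_spike_ms(100, 10, 20))        # capacity drops by 90%
    print(latency_spike_ms(100, 50, 20, 0.5))   # sender uses half the link
```

In this model the spike grows with the size of the capacity drop and with the reaction time, and it disappears when the sender leaves enough headroom. That headroom is the utilization given up in exchange for lower latency.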

Deploying L4S comes with a couple of operational considerations. On unstable links like WiFi it may be beneficial to tune the L4S bottleneck based on user preference because of the trade-off between latency and utilization. In addition, it is necessary to police the L4S queue because some applications could mark their traffic as L4S without responding appropriately to ECN feedback. The offending flow must then be de-marked and put in the classic queue.

To conclude, L4S has shown some very promising results. It opens the door to much lower latencies in the Internet. This will be an exciting space to follow in the coming months and years.

References:

[1] CableLabs’ L4S testing: https://l4s.cablelabs.com/l4s-testing/README.html

[2] RFC3168 The Addition of Explicit Congestion Notification (ECN) to IP: https://datatracker.ietf.org/doc/html/rfc3168

[3] DualQ Coupled AQMs for Low Latency, Low Loss and Scalable Throughput (L4S): https://datatracker.ietf.org/doc/draft-ietf-tsvwg-aqm-dualq-coupled/24/

[4] IPR documents for DualQ: https://datatracker.ietf.org/ipr/search/?submit=draft&id=draft-ietf-tsvwg-aqm-dualq-coupled

[5] The congestion-notification conflict: https://lwn.net/Articles/783673/

[6] An Experimental Evaluation of Low Latency Congestion Control over mmWave links: https://witestlab.poly.edu/blog/tcp-mmwave/

[7] A Lower Bound on Latency Spikes for Capacity-Seeking Network Traffic: https://arxiv.org/abs/2111.00488